Building Brunch

When GPT-3 came out in late 2020, I started exploring a bunch of prototypes for building adaptive user interfaces. The idea was that interface generation can (at least for a brief period in history) afford to be steered by language models that do not need strict scaffolding to generate bits of personalized interfaces.

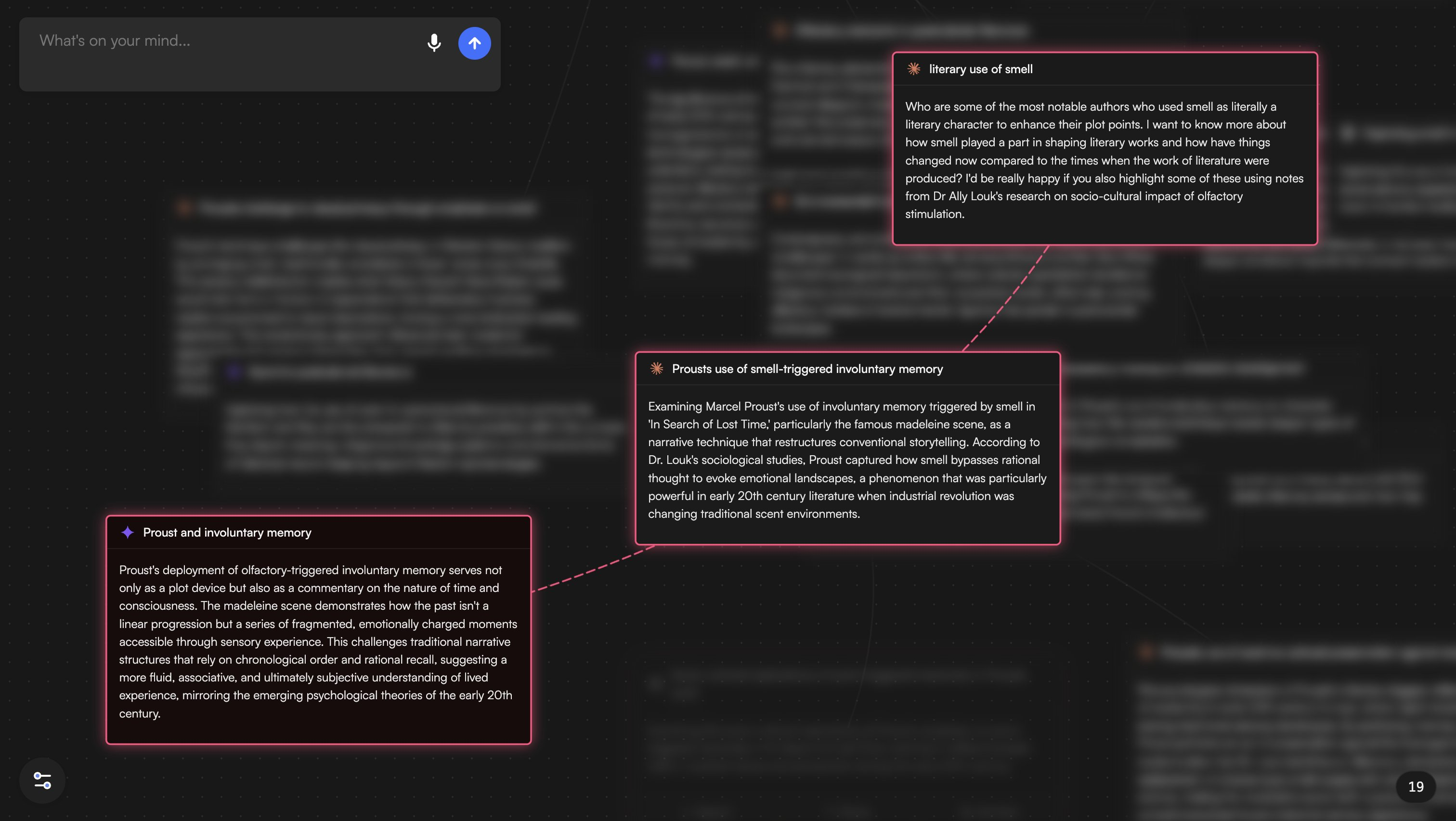

After ChatGPT came out, technology circles erupted with debate over whether chat is the best interface to become the anvil for shaping the thoughts of an LLM. Part of the discourse was about chat being a sort of power steering for structuring non-deterministic outputs, and part was about whether it is a correct representation of how we explore our thoughts. As humans, we don’t think in a linear fashion. We go in different directions, then go off into some more branches, until some of the branches intertwine and converge back with the main root, creating the seemingly perfect thought that makes things condense into a circle.

While working with multiple LLMs to explore and structure ideas, it became cumbersome to switch between multiple models and tabs; copy-pasting ideas and passing context from one tab to another became frustrating. Hence, I started building Brunch: a graphical node-based interface to watch exchanges between different instances of GPT-3, each one initialized with a different prompt to encourage divergence of outputs. For instance, one API call has a system prompt to make it think more along the good/moral side of things (the proverbial angel steering your thoughts and ideas in a generally good direction), while another has a system prompt set to make it act like the devil.

This experiment then evolved to use multiple language models, with a few more interesting ideas baked in along the way.

In 2025, a lot of these ideas/optimizations are no longer novel, but exploring these ideas was fun a couple of years back, and this essay deals with explaining some of them, and how they evolved over these years.

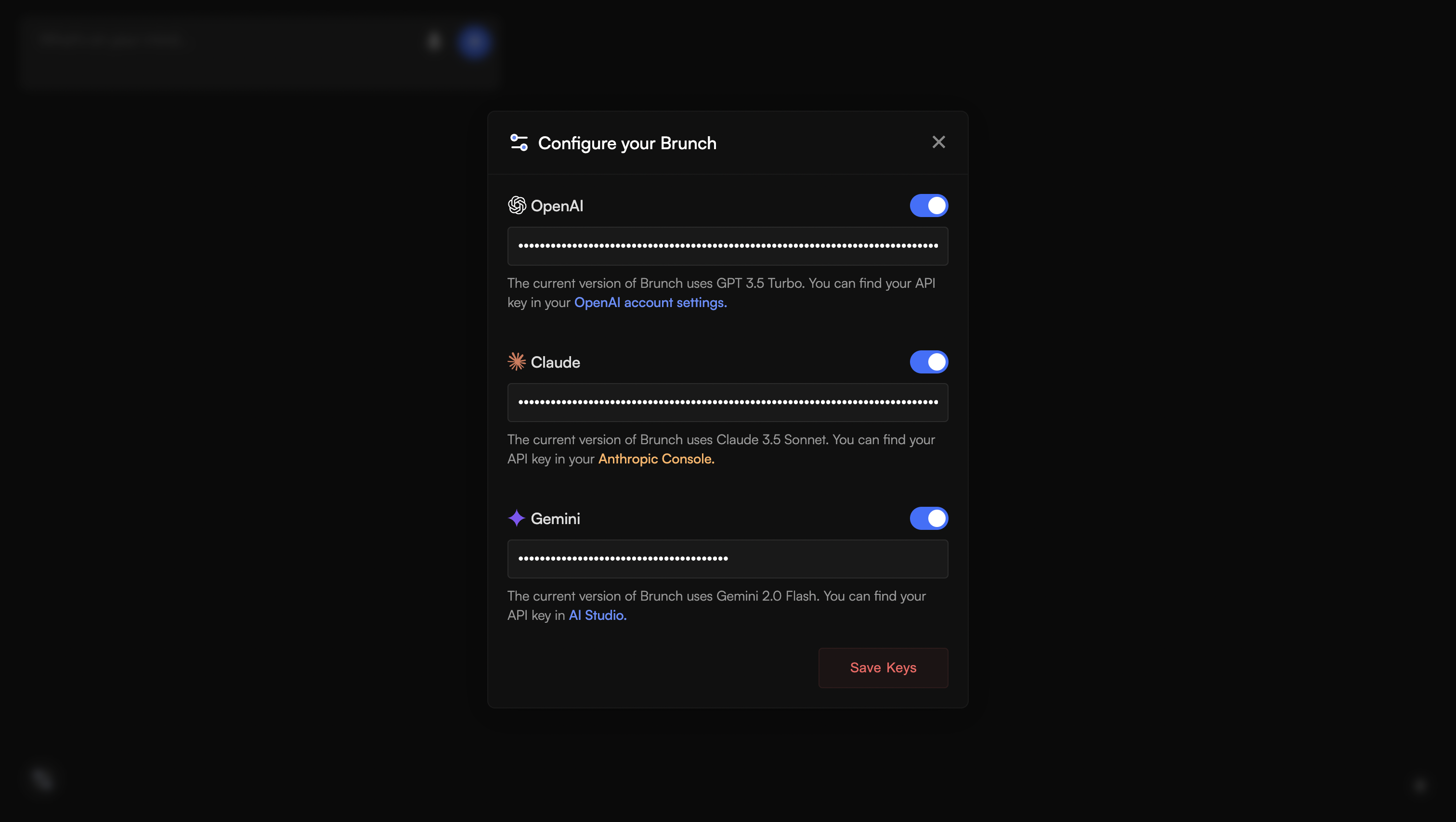

Freedom to select a model

When I started experimenting with LLMs and a deluge of them came out all at once, it became imperative for me that whatever tool or experiment I build comes with the freedom to select and use the models I want, mixing and intermingling them seamlessly without having to constantly switch tabs. And while these affordances to activate a model ease things significantly, I added another way to actually use them while you are working with LLMs.

Which brings us to…

Implicit Invocation

What if you could invoke any model (or, in the earlier version, different instances of GPT-3 itself linked with different aliases) by simply adding its name in the prompt? So if my instance is aliased “Coffee”, I can simply add the text, “Hey Coffee, I want you to…” and it would specifically be “Coffee” responding to my prompt.

Earlier, this simply scanned the input string for the relevant substring and then routed the API call to the matching instance.
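A minimal sketch of that kind of substring scan could look like the following. The function name `findAlias`, the case-insensitive matching, and the "earliest mention wins" rule are assumptions made for illustration, not Brunch's actual code.

```javascript
// Hypothetical helper: returns the alias mentioned earliest in the prompt,
// or null when no known alias appears. Matching is case-insensitive
// (an assumption; the original may have matched exactly).
function findAlias(prompt, aliases) {
  const text = prompt.toLowerCase();
  let best = null;
  let bestIndex = Infinity;

  for (const alias of aliases) {
    const index = text.indexOf(alias.toLowerCase());
    if (index !== -1 && index < bestIndex) {
      best = alias;
      bestIndex = index;
    }
  }
  return best;
}

// Usage: the returned alias decides which instance receives the API call.
const target = findAlias("Hey Coffee, I want you to...", ["Tea", "Coffee"]);
console.log(target);
```

A plain substring check like this also matches aliases buried inside longer words (for example "Tea" inside "steady"), which is one reason to layer smarter routing on top of it.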

While rewriting the older codebase for Brunch, I added a few optimizations on top of the basic string detection.

- I added another instance of GPT-3.5 tuned to be a classifier. I pass it a system prompt that outlines what each of the models is strong at, and it automatically returns a single string from the set of language models that had been added to the system prompt. If the instance “feels” that Claude is the best model to answer the question, it should simply output `claude`, or if Gemini can handle the question well, it should simply give me `gemini`.

- And now, based on the output string, we use a simple switch case to route the input prompt to the suitable LLM API.
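The two steps above can be sketched roughly as follows. Everything named here is hypothetical: `MODEL_STRENGTHS` holds placeholder descriptions rather than real claims about any model, the wording of the classifier prompt is invented, and `handlers` stands in for whatever functions actually call each LLM API. The network call to the classifier itself is left out.

```javascript
// Placeholder descriptions only; fill in whatever each model is good at.
const MODEL_STRENGTHS = {
  claude: "placeholder: what this model is strong at",
  gemini: "placeholder: what this model is strong at",
};

// Builds the classifier's system prompt from the model descriptions.
function buildClassifierPrompt(strengths) {
  const names = Object.keys(strengths);
  const lines = names.map((name) => `- ${name}: ${strengths[name]}`);
  return [
    "Pick the single best model for the user's question.",
    ...lines,
    `Reply with exactly one of: ${names.join(", ")}.`,
  ].join("\n");
}

// Routes the prompt based on the classifier's one-word answer.
// The label is normalized first, since model output may carry
// stray whitespace or capitalization.
function routePrompt(label, prompt, handlers) {
  switch (String(label).trim().toLowerCase()) {
    case "claude":
      return handlers.claude(prompt);
    case "gemini":
      return handlers.gemini(prompt);
    default:
      // Anything unexpected falls back to a default handler.
      return handlers.fallback(prompt);
  }
}
```

The default branch matters in practice: a classifier can occasionally answer with something outside the allowed set, and a fallback keeps the prompt from being dropped.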

Smart Clustering

This behaviour is still slightly flaky, but one instance of GPT-3.5 keeps scanning all the cards/thoughts/extensions generated and arranges them into contextual smart clusters, naming each cluster with a context-relevant string.
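As a rough illustration of the bookkeeping side only, here is a sketch that groups cards once the model has produced a cluster name for each one. The shape of `assignments` (card id mapped to cluster name), the `groupCardsByCluster` helper, and the "Unsorted" bucket are all assumptions; how Brunch actually prompts the model and stores clusters is not shown here.

```javascript
// Hypothetical helper: groups card ids under the cluster name the model
// assigned to each card. Cards without an assignment land in "Unsorted".
function groupCardsByCluster(cards, assignments) {
  const clusters = new Map();
  for (const card of cards) {
    const name = assignments[card.id] ?? "Unsorted";
    if (!clusters.has(name)) {
      clusters.set(name, []);
    }
    clusters.get(name).push(card.id);
  }
  return clusters;
}
```

Keeping a catch-all bucket is one way to tolerate the flakiness: a card the model skipped still shows up somewhere instead of vanishing.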

Add Context

Before expanding a thought or creating more adjacent thoughts, you can now add a separate custom string to steer the conversation in any direction that you like.

You can add additional prompts and have more granular control on how you can steer the conversation.
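One way to picture this is as an optional steering string that gets attached to the request before a card is expanded. The sketch below assumes a chat-style list of `{ role, content }` messages and an invented instruction wording; `buildExpansionMessages` is a hypothetical name, not a function from Brunch.

```javascript
// Hypothetical helper: builds the message list for expanding a thought.
// When the user supplies extra context, it is sent ahead of the thought
// as a system message so it can steer the response.
function buildExpansionMessages(parentThought, extraContext = "") {
  const messages = [
    { role: "user", content: `Expand on this thought:\n${parentThought}` },
  ];
  const steer = extraContext.trim();
  if (steer) {
    messages.unshift({ role: "system", content: steer });
  }
  return messages;
}
```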

Chain of Thought

When you are exploring the outputs of multiple LLMs across a bunch of diverse and scattered thoughts, it becomes important to be able to trace how a thought evolved. This bit was really fun, as it required some pretty gnarly JavaScript wrangling to work as intended. When you click on a specific thought/card, it triggers a recursive lookup for parent cards until the traversal reaches the very root card, which obviously wouldn’t have any parent attached to it.
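A minimal sketch of such a recursive parent lookup is below. It assumes each card stores a `parentId` (null for the root) and that cards live in a `Map` keyed by id; both the data shape and the `traceToRoot` name are illustrative guesses rather than Brunch's real structures.

```javascript
// Hypothetical helper: walks from the clicked card up through its parents
// and returns the chain ordered root-first. The `seen` set guards against
// accidental cycles in the parent links.
function traceToRoot(cardId, cardsById, seen = new Set()) {
  const card = cardsById.get(cardId);
  if (!card || seen.has(cardId)) {
    return [];
  }
  seen.add(cardId);

  // Base case: the root card has no parent, so the recursion stops here.
  if (card.parentId == null) {
    return [card];
  }
  return [...traceToRoot(card.parentId, cardsById, seen), card];
}

// Usage with three linked cards: root -> idea -> detail.
const cards = new Map([
  ["root", { id: "root", parentId: null }],
  ["idea", { id: "idea", parentId: "root" }],
  ["detail", { id: "detail", parentId: "idea" }],
]);
console.log(traceToRoot("detail", cards).map((card) => card.id));
```

Returning the chain root-first makes it straightforward to highlight the path on the canvas in the order the thought actually developed.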

Brunch was written entirely in vanilla HTML, CSS, and JavaScript. Significant chunks of the chain of thought traversal logic were courtesy of Claude (and honestly, 3.7 Sonnet is the best model to give you comparatively cleaner, nicely modularized code).

It was fun to add voice input and smaller fun bits like “The machines are thinking...”.

You can take Brunch for a spin at brunch.shuvam.xyz

Feel free to send your thoughts over on Bluesky or Twitter 🖖